GWAS Tutorial#

This notebook is an sgkit port of Hail’s GWAS Tutorial, which demonstrates how to run a genome-wide SNP association test. Readers are encouraged to read the Hail tutorial alongside this one for more background, and to see the motivation behind some of the steps.

Note that some of the results do not exactly match the output from Hail. Also, because sgkit is still a 0.x release, its API may change in backwards-incompatible ways.

import sgkit as sg

Before using sgkit, we import some standard Python libraries and set the Xarray display options to not show all the attributes in a dataset by default.

import numpy as np

import pandas as pd

import xarray as xr

xr.set_options(display_expand_attrs=False, display_expand_data_vars=True);

Download public 1000 Genomes data#

We use the same small (20MB) portion of the public 1000 Genomes data that Hail uses.

First, download the file locally:

from pathlib import Path

import requests

if not Path("1kg.vcf.bgz").exists():
    response = requests.get("https://storage.googleapis.com/sgkit-data/tutorial/1kg.vcf.bgz")
    with open("1kg.vcf.bgz", "wb") as f:
        f.write(response.content)

if not Path("1kg.vcf.bgz.tbi").exists():
    response = requests.get("https://storage.googleapis.com/sgkit-data/tutorial/1kg.vcf.bgz.tbi")
    with open("1kg.vcf.bgz.tbi", "wb") as f:
        f.write(response.content)
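The two download steps share the same "fetch only if missing" pattern, which can be factored into a small helper. The following is a minimal sketch, not part of the original notebook: the function name `download_if_missing` is hypothetical, and it swaps `requests` for the standard library's `urllib` so that the response is streamed to disk rather than held in memory.

```python
import shutil
import urllib.request
from pathlib import Path


def download_if_missing(url: str, dest: Path) -> bool:
    """Stream `url` to `dest` unless `dest` already exists.

    Hypothetical helper for illustration. Returns True if a download
    happened and False if the file was already present.
    """
    dest = Path(dest)
    if dest.exists():
        return False
    # Write to a temporary name first so an interrupted download
    # never leaves a truncated file under the final name.
    tmp = dest.with_name(dest.name + ".part")
    with urllib.request.urlopen(url) as response, open(tmp, "wb") as f:
        shutil.copyfileobj(response, f)
    tmp.replace(dest)
    return True


# Usage with the tutorial files (left commented out to avoid network access):
# base = "https://storage.googleapis.com/sgkit-data/tutorial/"
# for name in ("1kg.vcf.bgz", "1kg.vcf.bgz.tbi"):
#     download_if_missing(base + name, Path(name))
```

Writing to a `.part` file and renaming it afterwards is a design choice that keeps the existence check trustworthy on a re-run.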

Importing data from VCF#

Next, use the vcf2zarr command from bio2zarr to convert the VCF file to Zarr. The result is stored on the local filesystem in a directory called 1kg.vcz.

%%bash

vcf2zarr explode --force 1kg.vcf.bgz 1kg.icf

# vcf2zarr mkschema 1kg.icf > 1kg.schema.json # then edit 1kg.schema.json by hand

vcf2zarr encode --force -s 1kg.schema.json 1kg.icf 1kg.vcz

[W::bcf_hdr_check_sanity] PL should be declared as Number=G

Scan: 100%|██████████| 1.00/1.00 [00:00<00:00, 4.02files/s]

Explode: 100%|██████████| 10.9k/10.9k [00:18<00:00, 601vars/s]

Encode: 100%|██████████| 28.9M/28.9M [00:02<00:00, 11.1MB/s]

Finalise: 100%|██████████| 32.0/32.0 [00:00<00:00, 3.07karray/s]

We used the vcf2zarr explode command to first convert the VCF to an “intermediate columnar format” (ICF), then the vcf2zarr encode command to convert the ICF to Zarr, which by convention is stored in a directory with a vcz extension.
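The two-step conversion can also be driven from Python rather than a `%%bash` cell. The sketch below only assembles the same two command lines used above. The helper name `vcf2zarr_commands` is hypothetical, and the `subprocess.run` call is left commented out so the snippet has no side effects.

```python
import shlex


def vcf2zarr_commands(vcf: str, icf: str, schema: str, vcz: str) -> list[list[str]]:
    """Build the explode and encode command lines used in this tutorial.

    Hypothetical helper for illustration. The subcommands and flags are
    copied from the bash cell above; nothing else is assumed about the CLI.
    """
    return [
        ["vcf2zarr", "explode", "--force", vcf, icf],  # VCF -> ICF
        ["vcf2zarr", "encode", "--force", "-s", schema, icf, vcz],  # ICF -> Zarr
    ]


commands = vcf2zarr_commands("1kg.vcf.bgz", "1kg.icf", "1kg.schema.json", "1kg.vcz")
for argv in commands:
    print(shlex.join(argv))
    # import subprocess; subprocess.run(argv, check=True)  # uncomment to run
```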

Note that we specified a JSON schema file that was created with the vcf2zarr mkschema command (commented out above), then edited to drop some fields that are not needed for this tutorial (such as FORMAT/PL). The schema was also updated so that the third dimension of the call_AD field is alleles. vcf2zarr did not set this itself because the VCF header in this dataset declares FORMAT/AD with Number=. (meaning “unknown”) rather than Number=R (one value per allele, including the reference).
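The hand edits described above could in principle be scripted. The sketch below shows the idea on a toy dictionary. The schema layout it uses (a top-level `"fields"` list whose entries have `"name"` and `"dimensions"` keys) is an assumption made for illustration, as are the helper name `edit_schema` and the placeholder dimension names. Inspect your own 1kg.schema.json before adapting it.

```python
import copy


def edit_schema(schema: dict, drop_fields: set, dimension_overrides: dict) -> dict:
    """Return an edited copy of `schema`.

    ASSUMPTION: schema["fields"] is a list of dicts with "name" and
    "dimensions" keys. The real bio2zarr schema layout may differ.
    """
    edited = copy.deepcopy(schema)
    kept = []
    for field in edited["fields"]:
        if field["name"] in drop_fields:
            continue  # e.g. a field not needed downstream
        if field["name"] in dimension_overrides:
            field["dimensions"] = list(dimension_overrides[field["name"]])
        kept.append(field)
    edited["fields"] = kept
    return edited


# Hypothetical, heavily abbreviated schema used only for illustration.
toy_schema = {
    "fields": [
        {"name": "call_AD", "dimensions": ["variants", "samples", "placeholder_dim"]},
        {"name": "call_PL", "dimensions": ["variants", "samples", "placeholder_dim"]},
        {"name": "call_DP", "dimensions": ["variants", "samples"]},
    ]
}

edited = edit_schema(
    toy_schema,
    drop_fields={"call_PL"},
    dimension_overrides={"call_AD": ["variants", "samples", "alleles"]},
)
print([f["name"] for f in edited["fields"]])  # ['call_AD', 'call_DP']
```

With a real schema, the edited dictionary would be written back with `json.dump` before running the encode step.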

For more information about using vcf2zarr, see the tutorial in the bio2zarr documentation.

Now that the data has been written as Zarr, all downstream operations on it will be much faster. Note that sgkit uses an Xarray dataset to represent the VCF data, where Hail uses MatrixTable.

ds = sg.load_dataset("1kg.vcz")

Getting to know our data#

To start with we’ll look at some summary data from the dataset.

The simplest thing is to look at the dimensions and data variables in the Xarray dataset.

ds

<xarray.Dataset> Size: 29MB

Dimensions: (variants: 10879, samples: 284, alleles: 2,

ploidy: 2, contigs: 84, filters: 1,

region_index_values: 33, region_index_fields: 6)

Dimensions without coordinates: variants, samples, alleles, ploidy, contigs,

filters, region_index_values,

region_index_fields

Data variables: (12/38)

call_AD (variants, samples, alleles) int8 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_DP (variants, samples) int8 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

call_GQ (variants, samples) int8 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

call_genotype (variants, samples, ploidy) int8 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_genotype_mask (variants, samples, ploidy) bool 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_genotype_phased (variants, samples) bool 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

... ...

variant_id (variants) object 87kB dask.array<chunksize=(1000,), meta=np.ndarray>

variant_id_mask (variants) bool 11kB dask.array<chunksize=(1000,), meta=np.ndarray>

variant_length (variants) int8 11kB dask.array<chunksize=(1000,), meta=np.ndarray>

variant_position (variants) int32 44kB dask.array<chunksize=(1000,), meta=np.ndarray>

variant_quality (variants) float32 44kB dask.array<chunksize=(1000,), meta=np.ndarray>

variant_set (variants) object 87kB dask.array<chunksize=(1000,), meta=np.ndarray>

Attributes: (3)

source: bio2zarr-0.1.6

vcf_meta_information: [['fileformat', 'VCFv4.2'], ['hailversion', '0.2-29fbaeaf265e']]

vcf_zarr_version: 0.4
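The sizes and chunk counts in the dataset summary follow directly from the array shapes. As a small worked example, the sketch below reproduces the numbers for call_genotype, whose shape (10879, 284, 2), chunk shape (1000, 284, 2), and int8 dtype are taken from the output above. The helper name `chunk_summary` is hypothetical.

```python
import math


def chunk_summary(shape, chunks, itemsize):
    """Return (number of chunks, total bytes, bytes per full chunk)."""
    # Each dimension is split into ceil(size / chunk size) pieces.
    n_chunks = math.prod(math.ceil(s / c) for s, c in zip(shape, chunks))
    total_bytes = math.prod(shape) * itemsize
    chunk_bytes = math.prod(chunks) * itemsize
    return n_chunks, total_bytes, chunk_bytes


n_chunks, total_bytes, chunk_bytes = chunk_summary(
    shape=(10879, 284, 2),
    chunks=(1000, 284, 2),
    itemsize=1,  # int8 takes one byte per value
)
print(n_chunks)     # 11
print(total_bytes)  # 6179272, which the summary rounds to 6MB
print(chunk_bytes)  # 568000
```

Because only the variants dimension is split, each Dask task works on up to 1000 variants for all 284 samples at once.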

Next we’ll use display_genotypes to show the first and last few variants and samples.

Note: sgkit does not store the contig names in an easily accessible form, so we compute a variable variant_contig_name in the same dataset storing them for later use, and set an index so we can see the variant name, position, and ID.

ds["variant_contig_name"] = ds.contig_id[ds.variant_contig]

ds2 = ds.set_index({"variants": ("variant_contig_name", "variant_position", "variant_id")})

sg.display_genotypes(ds2, max_variants=10, max_samples=5)

| variants (rows) / samples (columns) | HG00096 | HG00099 | ... | NA21133 | NA21143 |
|---|---|---|---|---|---|
| (1, 904165, .) | 0/0 | 0/0 | ... | 0/0 | 0/0 |
| (1, 909917, .) | 0/0 | 0/0 | ... | 0/0 | 0/0 |
| (1, 986963, .) | 0/0 | 0/0 | ... | 0/0 | 0/0 |
| (1, 1563691, .) | ./. | 0/0 | ... | 0/0 | 0/0 |
| (1, 1707740, .) | 0/1 | 0/1 | ... | 0/1 | 0/0 |
| ... | ... | ... | ... | ... | ... |
| (X, 152660491, .) | ./. | 0/0 | ... | 1/1 | 0/0 |
| (X, 153031688, .) | 0/0 | 0/0 | ... | 0/0 | 0/0 |
| (X, 153674876, .) | 0/0 | 0/0 | ... | 0/0 | 0/0 |
| (X, 153706320, .) | ./. | 0/0 | ... | 0/0 | 0/0 |
| (X, 154087368, .) | 0/0 | 1/1 | ... | 1/1 | 1/1 |

10879 rows x 284 columns
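The expression `ds.contig_id[ds.variant_contig]` used above relies on integer-array indexing: variant_contig stores, for each variant, an index into the contig_id array. A minimal NumPy sketch of the same lookup, using toy arrays rather than the tutorial dataset:

```python
import numpy as np

# Toy stand-ins for ds.contig_id and ds.variant_contig (not the real data).
contig_id = np.array(["1", "2", "X"], dtype=object)
variant_contig = np.array([0, 0, 2, 2, 1])

# Indexing with an integer array looks up one contig name per variant.
variant_contig_name = contig_id[variant_contig]
print(variant_contig_name)  # ['1' '1' 'X' 'X' '2']
```

The result has one entry per variant, which is why it can be stored alongside the other variables on the variants dimension.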

We can show the alleles too.

Note: this needs work to make it easier to do

df_variant = ds[[v for v in ds.data_vars if v.startswith("variant_")]].to_dataframe()

df_variant.groupby(["variant_contig_name", "variant_position", "variant_id"]).agg({"variant_allele": lambda x: list(x)}).head(5)

| variant_contig_name | variant_position | variant_id | variant_allele |
|---|---|---|---|
| 1 | 904165 | . | [G, A] |
| 1 | 909917 | . | [G, A] |
| 1 | 986963 | . | [C, T] |
| 1 | 1563691 | . | [T, G] |
| 1 | 1707740 | . | [T, G] |
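The groupby step is needed because converting a two-dimensional variable such as variant_allele to a data frame produces one row per (variant, allele) pair. The pandas-only sketch below mimics that long layout with a hand-built toy frame (two variants taken from the table above) and collects the alleles back into one list per variant.

```python
import pandas as pd

# Toy long-format frame: one row per (variant, allele) pair.
long_df = pd.DataFrame(
    {
        "variant_contig_name": ["1", "1", "1", "1"],
        "variant_position": [904165, 904165, 986963, 986963],
        "variant_id": [".", ".", ".", "."],
        "variant_allele": ["G", "A", "C", "T"],
    }
)

# Group the rows belonging to the same variant and gather their alleles.
alleles = long_df.groupby(
    ["variant_contig_name", "variant_position", "variant_id"]
).agg({"variant_allele": lambda x: list(x)})
print(alleles)
```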

Show the first five sample IDs by referencing the dataset variable directly:

ds.sample_id[:5].values

array(['HG00096', 'HG00099', 'HG00105', 'HG00118', 'HG00129'],

dtype=object)

Adding column fields#

Xarray datasets can have any number of variables added to them, possibly loaded from different sources. Next we’ll take a text file (CSV) containing annotations, and use it to annotate the samples in the dataset.

First we load the annotation data using regular Pandas.

ANNOTATIONS_FILE = "https://storage.googleapis.com/sgkit-gwas-tutorial/1kg_annotations.txt"

df = pd.read_csv(ANNOTATIONS_FILE, sep="\t", index_col="Sample")

df.info()

<class 'pandas.core.frame.DataFrame'>

Index: 3500 entries, HG00096 to NA21144

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Population 3500 non-null object

1 SuperPopulation 3500 non-null object

2 isFemale 3500 non-null bool

3 PurpleHair 3500 non-null bool

4 CaffeineConsumption 3500 non-null int64

dtypes: bool(2), int64(1), object(2)

memory usage: 116.2+ KB

df

| Sample | Population | SuperPopulation | isFemale | PurpleHair | CaffeineConsumption |
|---|---|---|---|---|---|
| HG00096 | GBR | EUR | False | False | 4 |
| HG00097 | GBR | EUR | True | True | 4 |
| HG00098 | GBR | EUR | False | False | 5 |
| HG00099 | GBR | EUR | True | False | 4 |
| HG00100 | GBR | EUR | True | False | 5 |
| ... | ... | ... | ... | ... | ... |
| NA21137 | GIH | SAS | True | False | 1 |
| NA21141 | GIH | SAS | True | True | 2 |
| NA21142 | GIH | SAS | True | True | 2 |
| NA21143 | GIH | SAS | True | True | 5 |
| NA21144 | GIH | SAS | True | False | 3 |

3500 rows × 5 columns
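To see what `sep="\t"` and `index_col="Sample"` do without downloading anything, the same call can be run on an in-memory string. The two data rows below are copied from the table above. The exact raw layout of the annotations file is an assumption here, inferred from the parsed column names.

```python
import io

import pandas as pd

# Assumed raw layout: a tab-separated header line followed by one line per sample.
tsv = (
    "Sample\tPopulation\tSuperPopulation\tisFemale\tPurpleHair\tCaffeineConsumption\n"
    "HG00096\tGBR\tEUR\tFalse\tFalse\t4\n"
    "HG00097\tGBR\tEUR\tTrue\tTrue\t4\n"
)

# sep="\t" splits on tabs; index_col="Sample" makes the sample ID the row index.
toy = pd.read_csv(io.StringIO(tsv), sep="\t", index_col="Sample")
print(toy.dtypes)
```

Pandas infers the column types from the text, which matches the bool and int64 dtypes reported by `df.info()` above.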

To join the annotation data with the genetic data, we convert it to Xarray, then do a join.
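A left join keeps exactly the samples present in the genetic dataset, in their original order, and discards annotation rows for samples that were not genotyped. The pandas-only sketch below illustrates those semantics with `reindex` on toy data. It is not the Xarray merge itself, and HG00105 is deliberately left out of the toy annotations to show what a missing match looks like.

```python
import pandas as pd

# Samples present in the (toy) genetic dataset, in dataset order.
dataset_samples = ["HG00096", "HG00099", "HG00105"]

# Toy annotations: some samples are not in the dataset, and one is missing.
annotations = pd.DataFrame(
    {"Population": ["GBR", "GBR", "GBR", "GBR"], "CaffeineConsumption": [4, 4, 5, 4]},
    index=pd.Index(["HG00096", "HG00097", "HG00098", "HG00099"], name="Sample"),
)

# Left-join semantics: keep the dataset's samples, drop everything else,
# and fill samples without annotations with NaN.
joined = annotations.reindex(dataset_samples)
print(joined)
```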

ds_annotations = pd.DataFrame.to_xarray(df).rename({"Sample":"samples"})

ds = ds.set_index({"samples": "sample_id"})

ds = ds.merge(ds_annotations, join="left")

ds = ds.reset_index("samples").reset_coords(drop=True)

ds

<xarray.Dataset> Size: 29MB

Dimensions: (samples: 284, variants: 10879, alleles: 2,

ploidy: 2, contigs: 84, filters: 1,

region_index_values: 33, region_index_fields: 6)

Dimensions without coordinates: samples, variants, alleles, ploidy, contigs,

filters, region_index_values,

region_index_fields

Data variables: (12/43)

call_AD (variants, samples, alleles) int8 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_DP (variants, samples) int8 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

call_GQ (variants, samples) int8 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

call_genotype (variants, samples, ploidy) int8 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_genotype_mask (variants, samples, ploidy) bool 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_genotype_phased (variants, samples) bool 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

... ...

variant_contig_name (variants) object 87kB dask.array<chunksize=(1000,), meta=np.ndarray>

Population (samples) object 2kB 'GBR' 'GBR' ... 'GIH' 'GIH'

SuperPopulation (samples) object 2kB 'EUR' 'EUR' ... 'SAS' 'SAS'

isFemale (samples) bool 284B False True False ... False True

PurpleHair (samples) bool 284B False False False ... True True

CaffeineConsumption (samples) int64 2kB 4 4 4 3 6 2 4 ... 6 4 6 4 6 5 5

Attributes: (3)

Population (samples) object 'GBR' 'GBR' 'GBR' ... 'GIH' 'GIH'

array(['GBR', 'GBR', 'GBR', 'GBR', 'GBR', 'GBR', 'FIN', 'FIN', 'GBR', 'GBR', 'GBR', 'FIN', 'FIN', 'FIN', 'FIN', 'FIN', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'CHS', 'PUR', 'CDX', 'CDX', 'PUR', 'PUR', 'PUR', 'PUR', 'PUR', 'PUR', 'PUR', 'CLM', 'CLM', 'CLM', 'GBR', 'CLM', 'PUR', 'CLM', 'CLM', 'CLM', 'IBS', 'PEL', 'IBS', 'IBS', 'IBS', 'IBS', 'IBS', 'GBR', 'CDX', 'CDX', 'CDX', 'CDX', 'CDX', 'CDX', 'KHV', 'KHV', 'KHV', 'KHV', 'KHV', 'ACB', 'PEL', 'PEL', 'PEL', 'PEL', 'ACB', 'KHV', 'ACB', 'KHV', 'KHV', 'KHV', 'KHV', 'KHV', 'KHV', 'CDX', 'CDX', 'CDX', 'IBS', 'IBS', 'IBS', 'CDX', 'PEL', 'PEL', 'ACB', 'PEL', 'CDX', 'CDX', 'CDX', 'CDX', 'CDX', 'CDX', 'CDX', 'CDX', 'CDX', 'ACB', 'GWD', 'GWD', 'ACB', 'ACB', 'KHV', 'GWD', 'GWD', 'ACB', 'GWD', 'PJL', 'GWD', 'PJL', 'PJL', 'PJL', 'PJL', 'PJL', 'GWD', 'GWD', 'GWD', 'PJL', 'GWD', 'GWD', 'GWD', 'GWD', 'GWD', 'GWD', 'ESN', 'ESN', 'BEB', 'GWD', 'MSL', 'MSL', 'ESN', 'ESN', 'ESN', 'MSL', 'PJL', 'GWD', 'GWD', 'GWD', 'ESN', 'ESN', 'ESN', 'ESN', 'MSL', 'MSL', 'MSL', 'MSL', 'MSL', 'PJL', 'PJL', 'ESN', 'MSL', 'MSL', 'BEB', 'BEB', 'BEB', 'PJL', 'STU', 'STU', 'STU', 'ITU', 'STU', 'STU', 'BEB', 'BEB', 'BEB', 'STU', 'ITU', 'STU', 'BEB', 'BEB', 'STU', 'ITU', 'ITU', 'ITU', 'ITU', 'ITU', 'STU', 'BEB', 'BEB', 'ITU', 'STU', 'STU', 'ITU', 'CEU', 'CEU', 'CEU', 'CEU', 'CEU', 'CEU', 'CEU', 'CEU', 'CEU', 'CEU', 'YRI', 'CHB', 'CHB', 'CHB', 'CHB', 'CHB', 'CHB', 'CHB', 'CHB', 'CHB', 'CHB', 'CHB', 'YRI', 'YRI', 'YRI', 'YRI', 'YRI', 'JPT', 'JPT', 'JPT', 'JPT', 'JPT', 'JPT', 'JPT', 'JPT', 'JPT', 'JPT', 'JPT', 'YRI', 'YRI', 'YRI', 'YRI', 'YRI', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'LWK', 'MXL', 'MXL', 'MXL', 'MXL', 'MXL', 'ASW', 'MXL', 'MXL', 'MXL', 'MXL', 'MXL', 'ASW', 'ASW', 'TSI', 'TSI', 'TSI', 'TSI', 'TSI', 'TSI', 'TSI', 'TSI', 'TSI', 'TSI', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH', 'GIH'], 
dtype=object) - SuperPopulation(samples)object'EUR' 'EUR' 'EUR' ... 'SAS' 'SAS'

array(['EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'AMR', 'EAS', 'EAS', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'EUR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'EUR', 'AMR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'AFR', 'AMR', 'AMR', 'AMR', 'AMR', 'AFR', 'EAS', 'AFR', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EUR', 'EUR', 'EUR', 'EAS', 'AMR', 'AMR', 'AFR', 'AMR', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'EAS', 'AFR', 'AFR', 'AFR', 'AFR', 'SAS', 'AFR', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'AFR', 'AFR', 'AFR', 'SAS', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'SAS', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'SAS', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'SAS', 'SAS', 'AFR', 'AFR', 'AFR', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'AFR', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'EAS', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AFR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'AFR', 'AMR', 'AMR', 'AMR', 'AMR', 'AMR', 'AFR', 'AFR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'EUR', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS', 'SAS'], 
dtype=object) - isFemale(samples)boolFalse True False ... False True

array([False, True, False, True, False, False, True, False, False, True, False, False, True, True, False, False, False, False, True, False, False, True, False, True, False, False, False, True, True, True, False, True, True, True, False, True, True, False, False, True, False, True, False, True, True, False, True, True, False, False, True, True, True, False, True, False, False, True, True, True, True, True, True, True, True, True, False, True, True, True, True, True, True, False, False, True, False, True, True, True, False, False, True, False, True, True, True, False, True, False, False, False, True, True, True, False, False, False, False, False, False, False, False, False, True, True, False, True, True, False, False, True, True, True, False, True, False, True, True, False, False, True, False, False, False, False, True, True, True, True, False, True, False, False, True, False, True, True, False, False, False, False, True, True, True, True, True, True, False, True, True, True, False, True, False, True, True, False, True, True, False, True, False, True, False, True, True, False, False, False, False, True, False, True, True, False, True, True, True, True, True, True, False, True, False, True, True, False, False, False, False, True, False, False, True, False, True, False, False, True, False, True, False, True, False, True, True, True, False, True, True, False, False, False, True, False, True, False, False, True, True, True, False, False, False, False, False, True, False, False, True, True, True, False, True, False, True, True, False, True, False, True, True, True, False, False, True, False, False, True, False, True, False, True, False, False, True, True, False, False, False, True, False, True, True, True, False, True, True, False, True, False, False, True, True, True, True, True, False, False, False, False, False, True]) - PurpleHair(samples)boolFalse False False ... True True

array([False, False, False, False, False, True, True, False, False, False, True, False, True, True, True, False, False, False, False, True, True, True, True, True, True, False, True, True, True, False, True, True, False, True, False, False, False, False, True, False, True, True, True, False, True, False, True, False, False, False, False, True, False, False, True, False, False, True, True, False, True, False, False, False, False, False, True, True, False, False, True, True, True, False, False, True, False, True, True, True, True, True, True, False, True, True, False, True, True, True, True, True, True, True, False, False, False, True, False, True, True, False, False, False, False, True, False, True, False, False, True, True, True, True, True, True, True, False, True, False, False, False, True, True, False, False, True, True, True, True, True, False, False, True, True, False, False, False, False, False, True, False, False, False, False, True, False, False, False, False, False, False, False, False, False, True, False, False, True, True, True, False, True, False, True, True, True, True, True, True, False, False, False, False, False, False, True, True, True, True, True, False, False, True, False, True, True, False, False, True, False, False, True, True, True, True, True, True, False, True, True, False, False, False, False, True, True, False, True, True, False, True, True, False, False, True, False, True, True, True, False, False, False, False, False, False, True, False, False, False, True, False, False, True, False, True, True, False, False, False, False, False, True, False, False, True, False, True, True, True, False, False, False, False, True, False, False, True, True, False, True, True, False, False, False, False, True, True, True, True, True, True, True, False, False, True, False, False, True, True, True, False, True, True]) - CaffeineConsumption(samples)int644 4 4 3 6 2 4 2 ... 5 6 4 6 4 6 5 5

array([4, 4, 4, 3, 6, 2, 4, 2, 1, 2, 0, 5, 4, 5, 4, 3, 6, 5, 5, 7, 5, 5, 7, 5, 1, 5, 5, 5, 4, 4, 5, 5, 5, 6, 6, 4, 4, 6, 3, 3, 5, 4, 4, 5, 5, 4, 6, 5, 4, 4, 5, 6, 3, 7, 5, 5, 6, 3, 2, 5, 5, 4, 6, 5, 6, 4, 6, 7, 6, 7, 3, 5, 6, 5, 6, 4, 5, 4, 4, 5, 8, 3, 4, 4, 4, 7, 5, 4, 2, 6, 7, 6, 5, 3, 3, 4, 5, 5, 5, 5, 6, 4, 5, 7, 2, 3, 3, 2, 3, 6, 4, 2, 6, 5, 3, 4, 7, 6, 7, 6, 3, 4, 2, 2, 5, 6, 7, 8, 6, 2, 3, 2, 0, 5, 7, 5, 1, 4, 3, 2, 4, 6, 5, 4, 4, 1, 5, 5, 3, 1, 1, 4, 3, 2, 4, 2, 1, 3, 3, 4, 4, 5, 6, 5, 4, 5, 0, 4, 5, 4, 3, 3, 4, 4, 3, 5, 6, 5, 3, 5, 4, 4, 6, 3, 5, 5, 4, 5, 3, 5, 4, 6, 5, 7, 5, 6, 6, 4, 4, 5, 3, 5, 6, 5, 4, 3, 8, 2, 4, 4, 6, 8, 4, 3, 4, 3, 2, 5, 6, 6, 4, 3, 5, 7, 4, 2, 5, 5, 6, 3, 2, 4, 4, 6, 5, 6, 5, 7, 2, 4, 2, 1, 5, 3, 5, 3, 5, 2, 4, 9, 6, 4, 3, 4, 4, 6, 6, 7, 6, 6, 3, 4, 3, 6, 6, 3, 4, 4, 2, 4, 6, 7, 4, 5, 4, 5, 5, 6, 4, 6, 4, 6, 5, 5])

- source :

- bio2zarr-0.1.6

- vcf_meta_information :

- [['fileformat', 'VCFv4.2'], ['hailversion', '0.2-29fbaeaf265e']]

- vcf_zarr_version :

- 0.4

Query functions#

We can look at some statistics of the data by converting the relevant dataset variable to a Pandas series, then using its built-in summary functions. Annotation data is usually small enough to load into memory, so it is fine to use Pandas here.

Here’s the population distribution by continent:

ds_annotations.SuperPopulation.to_series().value_counts()

SuperPopulation

AFR 1018

EUR 669

SAS 661

EAS 617

AMR 535

Name: count, dtype: int64

The distribution of the CaffeineConsumption variable:

ds_annotations.CaffeineConsumption.to_series().describe()

count 3500.000000

mean 3.983714

std 1.702349

min -1.000000

25% 3.000000

50% 4.000000

75% 5.000000

max 10.000000

Name: CaffeineConsumption, dtype: float64
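The two queries above follow the same pattern: pick a variable from the Xarray dataset, convert it with to_series(), then call a Pandas summary method. The sketch below repeats that pattern on a small made-up dataset so it can be run without the tutorial data. The dataset `toy` and its six values are assumptions for illustration only; the variable names simply mirror the ones used in this tutorial.

```python
import numpy as np
import xarray as xr

# Hypothetical toy annotations (not the 1000 Genomes data); the variable
# names mirror the tutorial's, the values are invented for illustration.
toy = xr.Dataset(
    {
        "SuperPopulation": (
            "samples",
            np.array(["AFR", "EUR", "AFR", "EAS", "AFR", "EUR"], dtype=object),
        ),
        "CaffeineConsumption": ("samples", np.array([4, 3, 6, 2, 5, 4])),
    }
)

# Categorical variable: count how often each label occurs.
counts = toy.SuperPopulation.to_series().value_counts()

# Numeric variable: count, mean, std, min, quartiles and max in one call.
summary = toy.CaffeineConsumption.to_series().describe()

print(counts)
print(summary)
```

Both calls load the whole variable into memory, which is why this approach suits small per-sample annotations rather than the genotype arrays.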

There are far fewer samples in our dataset than in the full 1000 Genomes annotations, as we can see from the following queries.

len(ds_annotations.samples)

3500

len(ds.samples)

284

ds.SuperPopulation.to_series().value_counts()

SuperPopulation

AFR 76

EAS 72

SAS 55

EUR 47

AMR 34

Name: count, dtype: int64

ds.CaffeineConsumption.to_series().describe()

count 284.000000

mean 4.415493

std 1.580549

min 0.000000

25% 3.000000

50% 4.000000

75% 5.000000

max 9.000000

Name: CaffeineConsumption, dtype: float64

Here’s an example of an ad hoc query that can uncover a biological insight from the data: count each of the 12 possible SNPs (4 choices for the reference base × 3 choices for the alternate base).

df_variant.groupby(["variant_contig_name", "variant_position", "variant_id"])["variant_allele"].apply(tuple).value_counts()

variant_allele

(C, T) 2418

(G, A) 2367

(A, G) 1929

(T, C) 1864

(C, A) 494

(G, T) 477

(T, G) 466

(A, C) 451

(C, G) 150

(G, C) 111

(T, A) 77

(A, T) 75

Name: count, dtype: int64
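One way to read these counts is to group the 12 SNP types into transitions (purine to purine, or pyrimidine to pyrimidine) and transversions (everything else). The sketch below does this with the counts copied from the output above. The helper `is_transition` is a hypothetical name introduced here for illustration; it is not part of sgkit or Hail.

```python
# SNP counts copied from the value_counts() output above.
snp_counts = {
    ("C", "T"): 2418, ("G", "A"): 2367, ("A", "G"): 1929, ("T", "C"): 1864,
    ("C", "A"): 494, ("G", "T"): 477, ("T", "G"): 466, ("A", "C"): 451,
    ("C", "G"): 150, ("G", "C"): 111, ("T", "A"): 77, ("A", "T"): 75,
}

PURINES = {"A", "G"}  # the pyrimidines are C and T


def is_transition(ref, alt):
    """Hypothetical helper: True if ref and alt are in the same base class."""
    return (ref in PURINES) == (alt in PURINES)


n_transitions = sum(n for (ref, alt), n in snp_counts.items() if is_transition(ref, alt))
n_transversions = sum(snp_counts.values()) - n_transitions

print(n_transitions, n_transversions)
print(round(n_transitions / n_transversions, 2))
```

The four most common SNP types are exactly the four transitions, which together outnumber the eight transversion types by a ratio of about 3.7 in this dataset.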

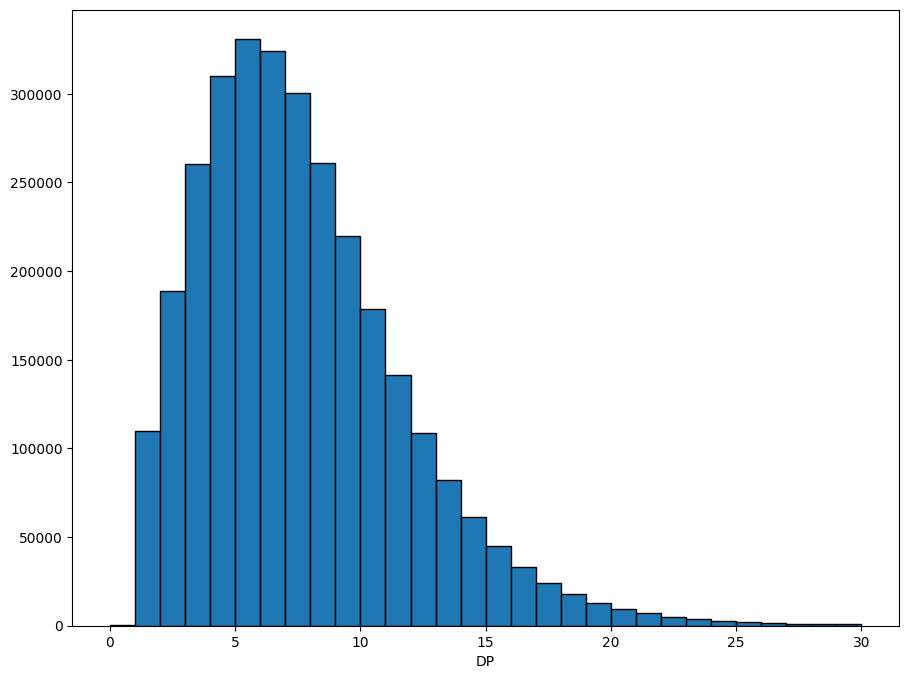

Often we want to plot the data, to get a feel for how it’s distributed. Xarray has some convenience functions for plotting, which we use here to show the distribution of the DP field.

dp = ds.call_DP.where(ds.call_DP >= 0) # filter out missing

dp.attrs["long_name"] = "DP"

xr.plot.hist(dp, range=(0, 30), bins=30, size=8, edgecolor="black");

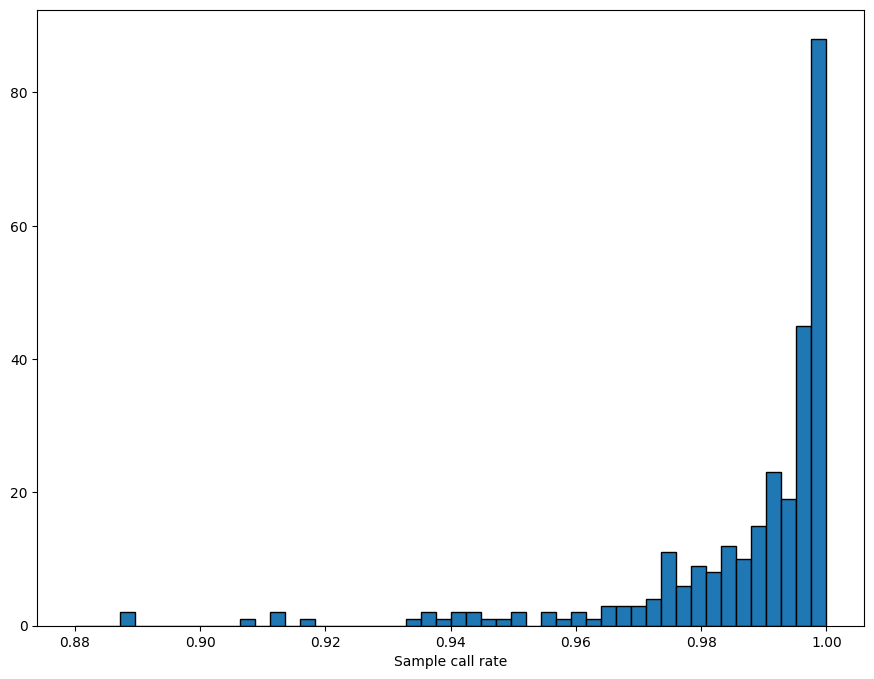

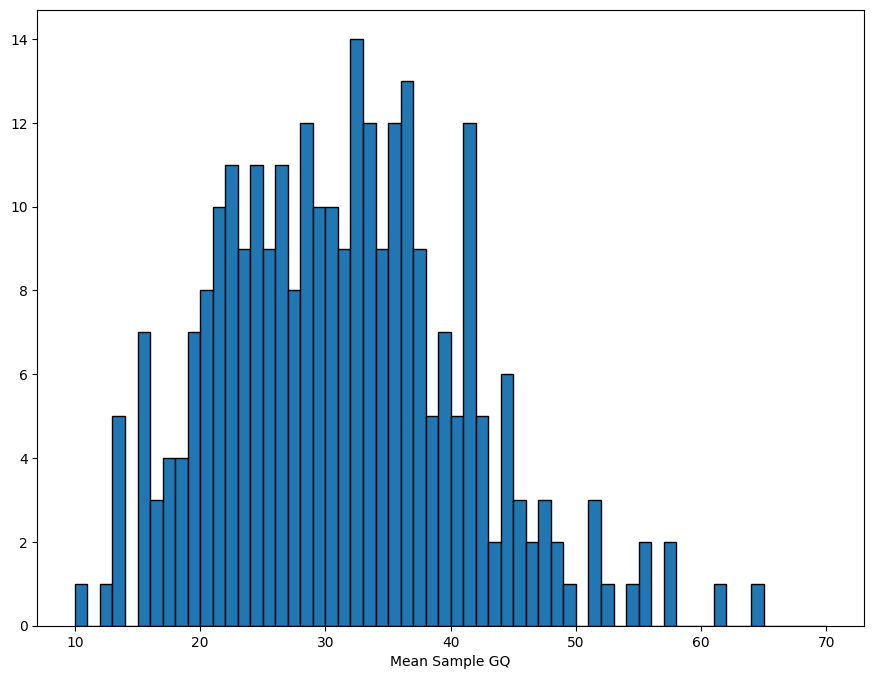
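The where call in the plotting code is what keeps missing values out of the histogram: entries that fail the condition are replaced with NaN, which also converts the integer array to a floating-point one. The sketch below shows this on a tiny made-up array. It assumes, as the `>= 0` filter in the code above suggests, that missing depths are stored as negative sentinel values; the array `toy_dp` is hypothetical, and np.histogram stands in for the plot so that the example runs without a display.

```python
import numpy as np
import xarray as xr

# Hypothetical read depths; -1 marks a missing value (an assumption here).
toy_dp = xr.DataArray(
    np.array([[12, -1, 7], [-1, 30, 4]], dtype=np.int8),
    dims=("variants", "samples"),
)

# Keep values that satisfy the condition, replace the rest with NaN.
dp = toy_dp.where(toy_dp >= 0)
n_missing = int(dp.isnull().sum())

# Bin the non-missing depths into the same 30 bins used for the plot.
values = dp.values
counts, edges = np.histogram(values[~np.isnan(values)], bins=30, range=(0, 30))

print(dp.dtype, n_missing, counts.sum())
```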

Quality control#

QC is the process of filtering out poor quality data before running an analysis. This is usually an iterative process.

The sample_stats function in sgkit computes a collection of useful metrics for each sample and stores them in new variables. (The Hail equivalent is sample_qc.)
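To give a feel for what these per-sample metrics mean, here is a minimal NumPy sketch that computes a few of them for a toy diploid genotype array. It illustrates the definitions only and is not sgkit's implementation: the array `gt` is made up, missing alleles are assumed to be encoded as -1, and a call counts as called only when both alleles are present. The result names echo the sample_ variables that sample_stats adds.

```python
import numpy as np

# Hypothetical genotype calls with shape (variants, samples, ploidy).
# 0 = reference allele, 1 = alternate allele, -1 = missing (assumption).
gt = np.array(
    [
        [[0, 0], [0, 1], [-1, -1]],
        [[1, 1], [0, 1], [0, 0]],
        [[0, 1], [-1, -1], [0, 0]],
        [[0, 0], [1, 1], [0, 1]],
    ],
    dtype=np.int8,
)

called = (gt >= 0).all(axis=-1)                # both alleles present
is_het = called & (gt[..., 0] != gt[..., 1])   # two different alleles
is_hom_ref = called & (gt == 0).all(axis=-1)   # both alleles reference
is_hom_alt = called & ~is_het & ~is_hom_ref    # both alleles alternate

# Reduce over the variants axis to get one value per sample.
sample_n_called = called.sum(axis=0)
sample_call_rate = sample_n_called / gt.shape[0]
sample_n_het = is_het.sum(axis=0)
sample_n_hom_ref = is_hom_ref.sum(axis=0)
sample_n_hom_alt = is_hom_alt.sum(axis=0)

print(sample_n_called, sample_call_rate)
print(sample_n_het, sample_n_hom_ref, sample_n_hom_alt)
```

Each metric is a reduction over the variants dimension, which is why the resulting variables have only the samples dimension.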

Here’s the dataset before running sample_stats.

ds

<xarray.Dataset> Size: 29MB

Dimensions: (samples: 284, variants: 10879, alleles: 2,

ploidy: 2, contigs: 84, filters: 1,

region_index_values: 33, region_index_fields: 6)

Dimensions without coordinates: samples, variants, alleles, ploidy, contigs,

filters, region_index_values,

region_index_fields

Data variables: (12/43)

call_AD (variants, samples, alleles) int8 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_DP (variants, samples) int8 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

call_GQ (variants, samples) int8 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

call_genotype (variants, samples, ploidy) int8 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_genotype_mask (variants, samples, ploidy) bool 6MB dask.array<chunksize=(1000, 284, 2), meta=np.ndarray>

call_genotype_phased (variants, samples) bool 3MB dask.array<chunksize=(1000, 284), meta=np.ndarray>

... ...

variant_contig_name (variants) object 87kB dask.array<chunksize=(1000,), meta=np.ndarray>

Population (samples) object 2kB 'GBR' 'GBR' ... 'GIH' 'GIH'

SuperPopulation (samples) object 2kB 'EUR' 'EUR' ... 'SAS' 'SAS'

isFemale (samples) bool 284B False True False ... False True

PurpleHair (samples) bool 284B False False False ... True True

CaffeineConsumption (samples) int64 2kB 4 4 4 3 6 2 4 ... 6 4 6 4 6 5 5

Attributes: (3)

We can see the new variables (with names beginning with sample_) after we run sample_stats:

ds = sg.sample_stats(ds)

ds

<xarray.Dataset> Size: 29MB

Dimensions: (samples: 284, variants: 10879, alleles: 2,

ploidy: 2, contigs: 84, filters: 1,

region_index_values: 33, region_index_fields: 6)

Dimensions without coordinates: samples, variants, alleles, ploidy, contigs,

filters, region_index_values,

region_index_fields

Data variables: (12/49)

sample_n_called (samples) int64 2kB dask.array<chunksize=(284,), meta=np.ndarray>

sample_call_rate (samples) float64 2kB dask.array<chunksize=(284,), meta=np.ndarray>

sample_n_het (samples) int64 2kB dask.array<chunksize=(284,), meta=np.ndarray>

sample_n_hom_ref (samples) int64 2kB dask.array<chunksize=(284,), meta=np.ndarray>

sample_n_hom_alt (samples) int64 2kB dask.array<chunksize=(284,), meta=np.ndarray>

sample_n_non_ref (samples) int64 2kB dask.array<chunksize=(284,), meta=np.ndarray>

... ...

variant_contig_name (variants) object 87kB dask.array<chunksize=(1000,), meta=np.ndarray>

Population (samples) object 2kB 'GBR' 'GBR' ... 'GIH' 'GIH'

SuperPopulation (samples) object 2kB 'EUR' 'EUR' ... 'SAS' 'SAS'

isFemale (samples) bool 284B False True False ... False True

PurpleHair (samples) bool 284B False False False ... True True

CaffeineConsumption (samples) int64 2kB 4 4 4 3 6 2 4 ... 6 4 6 4 6 5 5

Attributes: (3)
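To make the meaning of the sample_ variables concrete, here is a minimal NumPy sketch that computes analogous per-sample summaries on a tiny, made-up genotype array. The function toy_sample_stats and the array toy_gt are hypothetical names introduced for illustration only. The definitions used (missing alleles encoded as -1, and a call counted as called only when none of its alleles is missing) are assumptions about how such statistics are commonly defined, not a copy of sgkit's implementation.

```python
import numpy as np


def toy_sample_stats(gt):
    """Per-sample genotype summaries (illustrative, not sgkit's code).

    gt has shape (variants, samples, ploidy); -1 marks a missing allele.
    """
    gt = np.asarray(gt)
    called = (gt >= 0).all(axis=-1)            # no missing allele in the call
    first = gt[..., :1]                        # first allele, kept broadcastable
    hom = called & (gt == first).all(axis=-1)  # all alleles identical
    hom_ref = hom & (gt[..., 0] == 0)          # all alleles are the reference
    hom_alt = hom & (gt[..., 0] > 0)           # all alleles are the same alternate
    het = called & ~hom                        # at least two different alleles
    non_ref = called & (gt > 0).any(axis=-1)   # carries any alternate allele
    n_called = called.sum(axis=0)              # sum over the variants axis
    return {
        "sample_n_called": n_called,
        "sample_call_rate": n_called / gt.shape[0],
        "sample_n_het": het.sum(axis=0),
        "sample_n_hom_ref": hom_ref.sum(axis=0),
        "sample_n_hom_alt": hom_alt.sum(axis=0),
        "sample_n_non_ref": non_ref.sum(axis=0),
    }


# Made-up data: 4 variants x 3 samples x ploidy 2
toy_gt = np.array([
    [[0, 0], [0, 1], [1, 1]],
    [[0, 1], [-1, -1], [1, 1]],
    [[0, 0], [1, 1], [0, 1]],
    [[0, 0], [0, 0], [-1, -1]],
])

stats = toy_sample_stats(toy_gt)
for name, values in stats.items():
    print(name, values)
```

In this toy input the second and third samples each have one missing call out of four, so their call rate is 0.75, while the first sample has a call rate of 1.0. In the tutorial dataset the corresponding variables are lazy Dask arrays, so their values are only computed when requested.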
